Dr. Hakim Hacid, Chief Researcher for the Artificial Intelligence and Digital Science Research Center at TII, discusses Falcon-H1 Arabic, the UAE’s pioneering large language model built specifically for the Arabic language. Leveraging a hybrid Mamba-Transformer architecture, advanced dialect coverage, and long-context reasoning, Falcon-H1 Arabic sets new benchmarks in Arabic NLP. In this interview, Dr. Hacid explains the technical innovations, linguistic strategies, and national priorities behind its development, and its potential impact on education, healthcare, legal systems, and digital governance.

Falcon-H1 Arabic moves beyond a transformer-only design by combining State Space Models (Mamba) with Transformer attention in every block, running both in parallel and fusing their representations. This hybrid design is intended to deliver linear-time scalability for extremely long sequences while preserving attention’s long-range modelling. For Arabic specifically, given its rich morphology and flexible sentence structures, this approach improves coherence and reasoning across extended text.
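To make the idea of a parallel hybrid block concrete, here is a minimal toy sketch in plain Python. It is not TII's implementation: the fixed-decay recurrence stands in for Mamba (whose real parameters are learned and input-dependent), the attention uses identity projections, and fusing the two paths by averaging is an assumption made for illustration. All function names are hypothetical.

```python
import math

def ssm_scan(xs, decay=0.9):
    """Toy linear state-space recurrence: h_t = decay * h_{t-1} + x_t.
    A single pass over the sequence, so cost grows linearly with length."""
    state = [0.0] * len(xs[0])
    out = []
    for x in xs:
        state = [decay * h + v for h, v in zip(state, x)]
        out.append(list(state))
    return out

def causal_attention(xs):
    """Toy single-head causal self-attention with identity projections.
    Each position attends to itself and all earlier positions."""
    scale = 1.0 / math.sqrt(len(xs[0]))
    out = []
    for t, q in enumerate(xs):
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in xs[: t + 1]]
        peak = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        out.append(
            [sum(w * xs[j][d] for j, w in enumerate(weights)) / total
             for d in range(len(q))]
        )
    return out

def hybrid_block(xs):
    """Run both paths on the same input in parallel, fuse them by averaging
    (an illustrative assumption), and add a residual connection."""
    ssm_out = ssm_scan(xs)
    attn_out = causal_attention(xs)
    return [
        [x + 0.5 * (s + a) for x, s, a in zip(x_row, s_row, a_row)]
        for x_row, s_row, a_row in zip(xs, ssm_out, attn_out)
    ]

# Three token embeddings of width 2 (made-up values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hidden = hybrid_block(tokens)
```

The point of the sketch is the data flow: both paths see the same input and their outputs are merged inside each block, rather than alternating attention layers and state-space layers.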

Falcon-H1 Arabic leads the Open Arabic LLM Leaderboard across all model sizes. Which technical or data-centric decisions were most critical in achieving this performance leap?

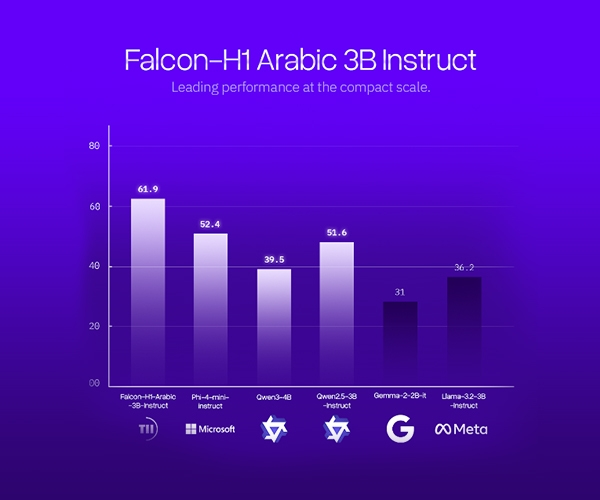

Falcon-H1 Arabic’s performance is the result of a combination of architectural change and training choices, rather than scale alone. At the core is a new hybrid architecture that combines Transformer attention with State Space Models (Mamba), allowing the model to scale efficiently over very long sequences while preserving strong reasoning. This is paired with a rebuilt Arabic-focused data pipeline, including higher-quality filtering, broader dialect coverage, and targeted post-training using supervised fine-tuning and preference optimization. Together, these decisions improved long-context stability, mathematical reasoning, and overall Arabic language understanding, enabling a 34-billion-parameter model to outperform significantly larger systems on Arabic benchmarks.

Source: TII

Arabic is linguistically complex and highly dialect-rich. How did your team address dialect coverage and cultural context without compromising model accuracy or efficiency?

Dialect coverage was a deliberate design choice. The training data was expanded beyond Modern Standard Arabic to include widely used dialects such as Egyptian, Levantine, Gulf, and Maghrebi Arabic. This ensured the model reflects how Arabic is spoken and written in real life. At the same time, careful data filtering and architectural efficiency allowed the model to maintain strong accuracy and performance, as reflected in its results on dialect and cultural understanding benchmarks.

The models demonstrate strong long-context stability up to 256K tokens. What new real-world use cases does this unlock for governments, enterprises, and researchers in the region?

A 256K-token context window allows Falcon-H1 Arabic to process hundreds of pages of text in a single interaction. This unlocks use cases that were previously impractical in Arabic, such as analysing lengthy legal documents, reviewing medical records, synthesising academic research, or working across large enterprise knowledge bases without losing context or coherence. For governments, enterprises, and researchers, this enables more reliable long-form analysis entirely in Arabic.
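As a rough back-of-envelope check on the "hundreds of pages" figure, the sketch below converts a token budget into pages. The tokens-per-word ratios and the 400 words per page are hypothetical values chosen for illustration, not measurements of the Falcon-H1 Arabic tokenizer, and reading "256K" as 256 × 1024 tokens is also an assumption.

```python
def estimate_pages(context_tokens, tokens_per_word, words_per_page):
    """Rough number of pages that fit in a context window."""
    words = context_tokens / tokens_per_word
    return words / words_per_page

CONTEXT_TOKENS = 256 * 1024  # assumes "256K" means 262,144 tokens

# Hypothetical tokenizer efficiencies and page density, for illustration only.
for tokens_per_word in (1.5, 2.0, 3.0):
    pages = estimate_pages(CONTEXT_TOKENS, tokens_per_word, words_per_page=400)
    print(f"{tokens_per_word} tokens/word -> about {pages:.0f} pages")
```

Under each of these assumed settings the estimate lands in the hundreds of pages, which is consistent with the claim above; the real figure depends on the tokenizer and the documents involved.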

Falcon-H1 Arabic outperforms models several times larger. How important is model efficiency for sovereign AI strategies, especially in emerging and resource-constrained environments?

Efficiency is central to sovereign AI. Falcon-H1 Arabic demonstrates that high performance does not require ever-larger models, but can be achieved through architectural innovation and focused training. This makes advanced AI more practical to deploy across different environments, from large-scale infrastructure to more constrained settings, while maintaining national control over data and capabilities.

Beyond general benchmarks, Falcon-H1 Arabic excels in STEM reasoning and cultural understanding. How do you balance technical reasoning capabilities with deep linguistic and cultural alignment?

The balance comes from treating technical reasoning and linguistic understanding as complementary rather than competing goals. Falcon-H1 Arabic was trained on a broad mix of Arabic, English, and multilingual content to support STEM reasoning and general knowledge, while simultaneously improving Arabic-specific filtering, dialect coverage, and cultural evaluation. This approach allows the model to perform well on STEM tasks while remaining deeply aligned with Arabic language and cultural context.

From your perspective, what differentiates building an Arabic-first LLM from adapting multilingual or English-centric models to Arabic?

An Arabic-first approach starts with the language itself. It fills a critical gap in language technology, enabling more natural, intelligent, and inclusive Arabic AI across the Gulf, Middle East, and North Africa. Rather than adapting a model trained primarily on English, Falcon-H1 Arabic was built with Arabic-specific data processing, filtering, and dialect coverage from the outset. This includes addressing Arabic morphology, orthography, and real-world language use. At the same time, maintaining multilingual balance ensures the model retains strong reasoning and domain breadth, rather than treating Arabic as a secondary capability.

Falcon-H1 Arabic enables advanced AI use cases in critical public-interest sectors by allowing institutions to work directly in Arabic, at scale and with long-form understanding. Its ability to handle up to 256K tokens of context supports tasks such as analyzing lengthy legal documents, reviewing medical records, synthesizing academic research, and navigating large government or enterprise knowledge bases without losing coherence. By combining strong reasoning, improved dialect coverage, and long-context stability, the model supports more accurate, accessible, and culturally relevant AI applications across education, healthcare, legal systems, and digital governance. This reduces reliance on translation, preserves nuance, and helps ensure that AI systems align more closely with how Arabic is used in real-world institutional settings.

TII has consistently ranked at the top of global AI benchmarks since 2023. What organizational or research practices enable sustained leadership at this level?

TII focuses on advancing foundational AI research, investing in architecture, data, and rigorous evaluation, while building AI capabilities that can compete at the highest global level. This work is driven by strong scientific leadership and the ability to attract and support top-tier researchers and engineers, enabling consistent progress at the frontier of AI.

Looking ahead, what is the next frontier for Arabic AI, and how does Falcon-H1 Arabic position the UAE to shape global AI development rather than follow it?

Our goal is to go even further with Arabic AI. We want to continue integrating more dialects, especially low-resource ones, in order to preserve them. Arabic must enter the AI space fully as a first-class citizen, which means that all capabilities offered in languages such as English, stronger reasoning for example, should also be natively available in Arabic. Finally, Arabic should also enter the multimodality arena, and research should support these needs in a truly native manner.